In the realm of Generative AI, we’ve become accustomed to a particular kind of storytelling. We talk about models that can “reason,” display “intelligence,” and utilize “neural networks.” These descriptions often come paired with sleek images of human brains lit up with electric connections or other human-like metaphors. It’s a compelling narrative, but one that misses a fundamental truth: what’s actually happening is mathematics—complex, fascinating, and utterly inhuman mathematics.

Beyond the Anthropomorphic Metaphors

When we describe an AI model as “thinking” or “deciding,” we’re applying human frameworks to computational processes that bear little resemblance to human cognition. The reality is far more mechanical: matrices multiplying, vectors transforming, and probabilities calculating—all at scales and speeds that defy human comparison.

These systems don’t possess understanding or reasoning in any human sense. They’re statistical pattern-matching engines, operating on principles of linear algebra, calculus, and probability theory. They process enormous datasets not through contemplation but through mathematical operations optimized for parallel computation.

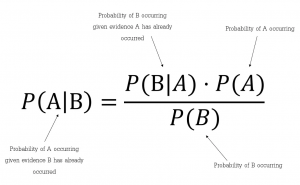

The Mathematical Reality

What’s actually happening when a generative AI produces text, images, or other content?

At their core, these systems perform a sophisticated form of statistical analysis. Language models like GPT or Claude process text as tokens (word fragments, each mapped to a vector of numbers), calculating probabilities for what should come next based on patterns identified during training. Image generators like DALL-E or Midjourney navigate high-dimensional latent spaces defined by mathematical transformations of visual data.
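To make "calculating probabilities for what should come next" concrete, here is a minimal Python sketch. It assumes a hypothetical five-word vocabulary and made-up raw scores (logits) standing in for what a trained model would compute; real models derive these scores from billions of learned parameters over far larger vocabularies. The softmax function turns the scores into a probability distribution, and the simplest decoding strategy (greedy decoding) just picks the most probable token.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtracting the max score avoids overflow and does not change the result.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical vocabulary and scores for the context "The cat sat on the"
vocab = ["mat", "roof", "dog", "moon", "the"]
logits = [4.0, 2.5, 0.5, 0.1, -1.0]

probs = softmax(logits)
for token, p in sorted(zip(vocab, probs), key=lambda pair: -pair[1]):
    print(f"{token:>5}: {p:.3f}")

# Greedy decoding: pick the single most probable next token.
next_token = vocab[probs.index(max(probs))]
print("next token:", next_token)
```

Nothing in this computation prefers "mat" because of what a mat is; it simply has the highest score, so it receives the highest probability.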


The “neural” in neural networks refers to a mathematical architecture inspired by—but functionally quite different from—biological neurons. The resemblance ends at the basic concept of inputs, weights, and outputs. The actual implementation exists purely in the realm of linear algebra and calculus.
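The "inputs, weights, and outputs" that make up an artificial neuron can be written in a few lines of Python. This is an illustrative sketch with hypothetical numbers: each neuron computes a weighted sum of its inputs plus a bias and passes the result through an activation function (sigmoid here, one of several common choices). A layer of such neurons amounts to a matrix-vector product followed by that function, which is where the linear algebra comes in.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial 'neuron': weighted sum of inputs plus bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def layer(inputs, weight_matrix, biases):
    """Many neurons reading the same inputs: a matrix-vector product plus activation."""
    return [neuron(inputs, row, b) for row, b in zip(weight_matrix, biases)]

# Hypothetical values chosen for illustration only; in a trained network
# the weights and biases are learned from data.
x = [0.5, -1.0, 2.0]
W = [
    [0.2, 0.4, -0.1],
    [-0.3, 0.1, 0.5],
]
b = [0.0, 0.1]

print(layer(x, W, b))
```

Stacking many such layers, with weights adjusted during training, is essentially all a "neural network" is; no biological machinery is simulated.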

Why the Distinction Matters

This isn’t merely semantic nitpicking. The anthropomorphic framing of AI creates misconceptions about capabilities and limitations. When we speak of AI “hallucinating,” we imply intentionality where none exists. These systems don’t fabricate or imagine—they produce outputs that mathematically align with their training data patterns, regardless of factual accuracy.
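A toy bigram model can illustrate how a fluent but false statement emerges without any "intent." This is a drastic simplification, assuming a hypothetical two-sentence training corpus in which every sentence is true; real language models are vastly larger and condition on long contexts, but they too are trained to produce statistically likely continuations rather than to check facts. The sketch "trains" by counting which word follows which, then enumerates every sentence the model can produce starting from "paris".

```python
from collections import defaultdict

# A tiny, hypothetical training corpus. Every sentence in it is true.
corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
]

# "Training": count which word follows which (a bigram model).
bigrams = defaultdict(lambda: defaultdict(int))
for sentence in corpus:
    words = sentence.split()
    for current, nxt in zip(words, words[1:]):
        bigrams[current][nxt] += 1

def continuations(word):
    """Return every word sequence the model can produce starting from `word`."""
    followers = bigrams.get(word)
    if not followers:
        return [[word]]
    return [[word] + rest for nxt in sorted(followers) for rest in continuations(nxt)]

outputs = [" ".join(seq) for seq in continuations("paris")]
for text in outputs:
    label = "in training data" if text in corpus else "NEW (and false)"
    print(f"{text!r}: {label}")
```

Every word-to-word transition in "paris is the capital of italy" appears in the training data; only the combination is new. Nothing in the computation distinguishes the true output from the false one.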

Understanding the mathematical nature of AI helps us better contextualize both its impressive capabilities and fundamental limitations. These systems excel at tasks amenable to statistical analysis but struggle with causal reasoning, contextual understanding, and adapting to scenarios outside their training distribution.

Moving Forward Clearly

As we continue developing and deploying generative AI systems, precision in how we discuss them matters. Clear language about what these systems actually do—the mathematical operations they perform, the statistical patterns they identify—leads to better design, more responsible implementation, and more realistic expectations.

The next time you see an AI breakthrough described with brain imagery or cognitive terms, remember what’s behind the curtain: elegantly arranged mathematics, executed with remarkable efficiency, but mathematics nonetheless. No consciousness, no understanding—just calculations, transformations, and probabilities combining to produce results that only appear magical until we examine the math.

Interested in a deeper dive?

- Understanding What is Probability Theory in AI: A Simple Guide – Bluesky Digital Assets

- Demystifying Neural Networks: A Beginner’s Guide – Midokura

- Pretending to Think: The Illusion of Deep Research – Mark C. Marino, Medium, July 2025

A nice summary, but it is missing a discussion from the perspective of the philosophy of mind. Probably the dominant theory of mind is Functionalism, which defines mental processes and capabilities in terms of their functional role. Given this, “intelligence”, “reasoning”, “understanding”, etc. are understood solely in functional terms. That is, if a system functions as if it understands, it does understand, *literally*. It is important, then, to draw a distinction between ‘intelligence’ and ‘consciousness’ (as the qualitative aspect of experience / mental phenomena). Functionalists argue there is no other way to say what it means to, for example, understand something. Further, there is a lot of research on brains in which researchers talk in ways similar to how the above describes AI model operations, e.g., trying to understand what ‘algorithms’ run on cortical columns.

No doubt much of this remains open. But it may just be a human bias to say these models are not reasoning or being creative or intelligent – especially given that we are very far from knowing how our brains produce such things.

Hi Michael –

Thanks for your thoughtful response. That’s an interesting lens for evaluating intelligence, and from this standpoint you could certainly argue that if it’s producing the right outputs, it must be a form of intelligence. But there are still points of tension around that.

Even if it mimics human reasoning, that doesn’t necessarily imply it has the same *kind* of intelligence. Leaning on images of human brains and metaphors linked to human reasoning obscures important differences between human and machine intelligence, for example the experience gained from embodied interaction with the physical world.

It’s really fascinating to hear that human brain researchers talk about ‘algorithms’. It almost sounds like they’re doing the opposite – ascribing machine attributes based on outputs. This is going to be an interesting debate to follow.